I Vibe Coded an AI System to Write Substacks about AI

Or What Happens When We Magically Introduce Complexity We Don't Understand?

I’m experimenting with AI to translate my posts. Check out “I Vibe Coded an AI System to Write Substacks about AI” in: العربية | Português | Bahasa Indonesia | 简体中文 | हिन्दी | Français | Español.

TL;DR

I tried vibe coding a Retrieval-Augmented Generation (RAG) system to write my Substack posts. It sort of worked. Which made me wonder: What happens when we can build functioning systems without understanding how? This post explores the tension between magic and comprehension and what it might imply for software, markets, and beyond.

Introduction

One of the most fascinating aspects of AI is its coding ability (the Claude 4 coding demo from a few days ago is worth watching!).

This capability and its application are colloquially known as “vibe coding.”

This week, I carried out an experiment in vibe coding to understand what the excitement was all about.

What is vibe coding? It consists of creating software with AI, guided by your intuition and ideas, and then refining it iteratively with sequential prompts.

It's magic.

My goal wasn’t to build software per se (though that is interesting too); rather, I wanted to understand what it might mean if everyone had this power at their disposal.

That is, what does the advent of vibe coding mean for how we build products, companies, and society as a whole?

Using AI to Write a Substack about AI

First, I needed something to vibe code.

It seemed that vibe coding and my sabbatical goal of writing Substack posts about AI were a perfect combination to experiment with.

What if I didn’t have to write my posts, but instead built a system to write them all for me?

I’m not talking about just telling ChatGPT to write a post about topic X, but actually building a retrieval-augmented generation system (RAG) that would take in current headlines from news, social media, academic articles, and whatever else, then write a complete article for me, like me.

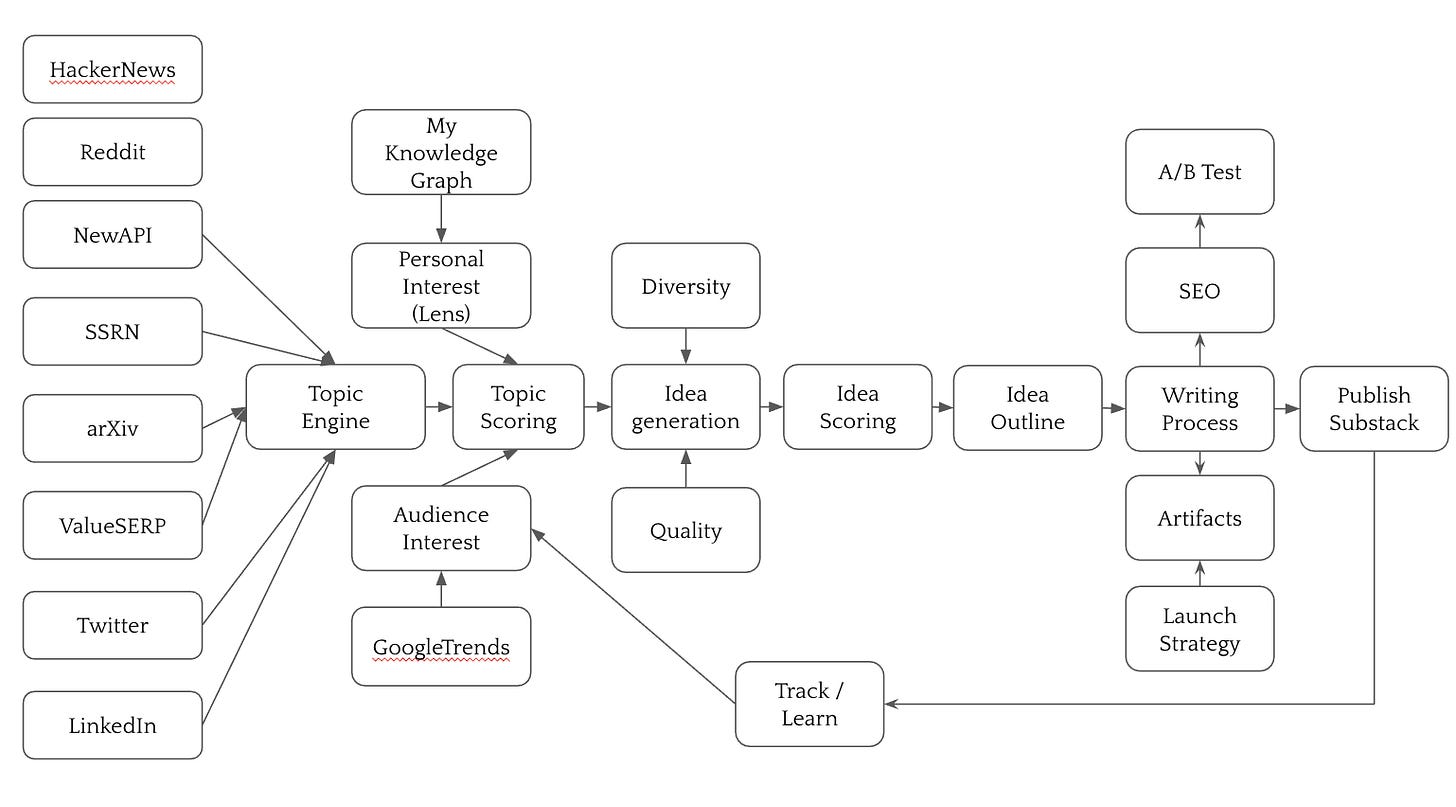

So, taking my previous post on Patchwork AI seriously and building on the idea that AI will be integrated into workflows, I thought I would begin with a comprehensive schematic of what a generative AI system for writing Substack posts for superadditive.co would look like.

Ingestion and Inspiration

So, there would be multiple layers of code, a mix of ingestion from APIs that would bring in content from across the internet, including Hacker News, Reddit, arXiv, etc.

This content would feed into a topic engine. This engine would then evaluate each piece of content based on my personal lens, audience interest, and then score each possible topic for relevance.

Idea Generation and Evaluation

Ideas would be generated with a focus on diversity and quality.

I don’t know exactly how, but it would happen.

Writing

These ideas would then be scored using some rubric, and the full idea for the substack article would be outlined.

A writing process would ensue. My agent minions would auto-generate SEO and A/B tests and input some aspects of my strategy and artifacts to write a more personalized post.

Launch and Feedback

Then, I would publish on Substack, learn from user engagement, and update my strategy and tactics.

Let’s Start Vibe Coding

Okay, I was going a little wild here as I thought through what I could possibly do. I’m not going to spend all this time building this big system because I wouldn’t want to read those Substacks anyway (at least I don’t think I would want to read this).

However, it was helpful to get those ideas out there.

I’m going to build a much simpler system using a free API, specifically the arXiv API, and then use my OpenAI subscription to build the RAG system.

Prompt 1: Build Me a RAG System for Substack Posts

1. I would like to pull recent articles from the archive with an API for a given keyword that I choose. 2. I would like to save these into a csv file. 3. I would like to cluster the articles into 5 topics. 4. Using the text of the articles in the topic, I would like to have open AI generate ideas for 10 possible substack post ideas for each topic. The posts should be related to innovation, economics, industrial organization, organization theory, entrepreneurship. 5. The ideas should be output into a txt file with the relevant articles in the cluster that was just described at the bottom, with a link to the original articles. 6. Here is my openai API: "OPEN AI API GOES HERE"

http://export.arxiv.org/api/query?search_query=ti:%22generative%20ai%22&sortBy=submittedDate&sortOrder=descending&max_results=100 The output is:

<?xml version="1.0" encoding="UTF-8"?> <feed xmlns="http://www.w3.org/2005/Atom"> <link href="http://arxiv.org/api/query?search_query%3Dti%3A%22generative%20ai%22%26id_list%3D%26start%3D0%26max_results%3D30" rel="self" type="application/atom+xml"/> <title type="html">ArXiv Query: search_query=ti:"generative ai"&id_list=&start=0&max_results=30</title> <id>http://arxiv.org/api/AVnAV8tSzmKVQVQnaeljoO7C0ec</id> <updated>2025-05-13T00:00:00-04:00</updated> <opensearch:totalR

…..

….

>

At first, it just generated the output for me.

I wanted the code (typo included for accurate representation of my process)!

i just want code generated to do these tasks, with each step explainePrompt 2: Make it Modular

ChatGPT produced lots of code. It was hard for me to process it with “vibes.”

So, I asked it to make the code a bit more modular so I could scan it for understanding.

i'd like to make the code more modular. In particular, I would like to separate out the prompt so that it can be better.Actually, re-reading this prompt, I realized that I made no sense. But it did a pretty good job turning my wish into modular code…

Now I have my MVP code for my Superadditive.co substack post generator.

Okay, this is pretty cool. Will it work? Let me try it out in Google Colab and see if it works.

Post 1.0: Cringe!

Here’s the introduction for the Substack it produced. If you want to read the entire piece, you can scroll to the bottom.

Imagine yourself in a bustling city, surrounded by intricate networks of infrastructure, each system functioning autonomously yet interdependently. This sprawling urban landscape represents the complexity of our current sociotechnical systems, where artificial intelligence (AI) has become an integral part of our daily lives, just like the electric grid or public transportation. Yet, as we increasingly rely on these AI systems, we're confronted with a paradox: the very technology that promises to improve our lives also brings with it new challenges and risks. This article explores the rise of Generative Artificial Intelligence (GenAI), a powerful new technology that is revolutionizing industries but also raising concerns about accountability, security, and education.

Nevertheless, this was magic. Wingardium Leviosa.

The output is not good. I would not read it or encourage anyone to read it because it is not based on any real “idea.”

However, as a minimum viable product, it’s promising.

So far, I know my prompting stinks. But that is what makes it even more impressive.

It’s a vibe.

Prompt 3: Adding Personalization

Overall, it's very cool that ChatGPT could build this so fast. The content is pretty generic and uninspired, even though it was generated from recent research pulled from arXiv.1

I decided to vibe code my way to a slightly better setup.

One idea I had was that to make it more personalized, I should probably tell the system something about myself. Otherwise, of course, it's going to produce something generic. So, I asked it to incorporate my background into the post. I uploaded my CV and pasted the code that came after Prompt 2.

i'd like to add a function that injects my personality into the writing of the substack. Here is my CV.It generated the code and told me to include this piece of code that would incorporate my personality into the writing.

def personalize_voice(base_prompt: str, personality_descriptor: str = None) -> str:

"""Injects a personal writing voice into the base Substack prompt."""

default_descriptor = (

"You are Sharique Hasan, a professor of strategy and sociology who blends deep academic rigor "

"with accessible storytelling. Your writing style is reflective, often drawing from personal experience, "

"field experiments, and empirical work to illustrate insights. You connect theory to practice with clarity, "

"emphasizing real-world implications, especially in entrepreneurship, innovation, and social networks."

)

descriptor = personality_descriptor if personality_descriptor else default_descriptor

personalized_prompt = base_prompt.replace(

"You are a Substack writer specializing in",

f"{descriptor}\n\nYou are writing a Substack article specializing in"

)

return personalized_promptprompt = format_substack_prompt(cluster_id, summaries)base_prompt = format_substack_prompt(cluster_id, summaries)

prompt = personalize_voice(base_prompt)But I had no clue where to paste it in, and frankly, I was too lazy to understand what was going on. So, I asked it to do it for me with two words.

full codeYay! It produced the full code.

I ran the code again, and it still produced pretty poor, and in this case, quite cringeworthy writing.

### Substack Post for Cluster 1

**Opening Paragraph**

As I stroll through San Francisco, a city I call home, I can't help but notice the intricate urban layout that encapsulates its history, culture, and aspirations. The serpentine roads, the Victorian houses, and the steel-and-glass skyscrapers all speak to the city's past and future. Each architectural detail, each junction, and each open space is a testament to meticulous urban planning. But with the dawn of the AI era, we are witnessing a paradigm shift in how cities are designed and planned. Could generative AI redefine urban planning, and what are the implications for society at large?

I thought maybe the problem was that I was using the old model, GPT-4, so I asked it to upgrade to the best model available. It didn’t. It used 4-turbo. I wanted 04-mini, so I did it myself.

can you upgrade the chatgpt model to the best model out there. # ========= GENERATE BLOG POST =========

def generate_post_ideas(summaries, cluster_id, openai_client):

base_prompt = format_substack_prompt(cluster_id, summaries)

prompt = personalize_voice(base_prompt)

response = openai_client.chat.completions.create(

model="o4-mini-2025-04-16", # Updated to latest best model

messages=[{"role": "user", "content": prompt}],

temperature=1

)

return response.choices[0].message.contentThe writing in this version was way better. Frankly, it scared me because it started sounding like me. However, the story is NOT true.

Last spring, I found myself in a small conference room at a community center in rural Virginia, listening to a group of farmers debate whether ultraviolet light could cure COVID-19. I’d been invited to speak about information flows in crisis moments, but the talk evolved into an impromptu fact-checking workshop: we sifted through Facebook posts, dissected forwarded audio clips, and traced dubious medical claims back to satirical news sites. One farmer confided he’d always trusted what a neighbor shared on WhatsApp. When I explained how generative AI can spin entirely new, but patently false, stories at the click of a button, his eyes widened. We’d crossed a threshold: misinformation had ceased to be an accident of rumor; it was now a technologically amplified phenomenon, blurring the line between honest mistake and deliberate manipulation.

Prompt 4: Making it Sound More Like Me

Frankly, though, the full post wasn’t something I would be interested in reading because it still lacked an element that I couldn’t really put my finger on.

Here is a real substack that I wrote (first one) followed by an autogenerated substack with a RAG system I vibe coded. Give me some ideas about how could modify the code below to get the AI generated post, to have the same kind of feel and interest to the human one. It suggested more stuff, including:

🗣️ Inject Real Voice into Output via Style Transfer + Memories

🤯 Include a “contrarian move” requirement in the prompt

📉 Add a ‘Meta-commentary’ Layer

📚 Make the system rate its sources for emotional or intellectual resonance

🧵 Encourage narrative scaffolding in the blog post generation

🪞 Don’t simulate your voice — simulate your process

✨ Final Thought

Your experiment is valuable not because it automates your Substack, but because it reveals what makes your writing irreplaceably human: recursive thought, lived stakes, intellectual humility, and a sense of friction between knowing and not knowing.

In other words, vibe coding can mimic your structure.

But not your struggle.

And your struggle is the content.

Would you like help rewriting your AI prompt based on the feedback above — perhaps as a new modular template?

What I Learned Through This Experiment

I’m sure these other modules could be added. I didn’t incorporate them because I learned something during this activity that I thought was worth thinking through a bit more than creating a fully functional system to replace me in writing Substack posts about AI.

First, let me take a moment to reflect on what I learned about the quality of the posts generated by AI through this process.

Currently, the post the AI has written is not very readable.

I’m pretty sure that with better prompts, more modules, and more data sources, the RAG system could create a very interesting post that I wouldn’t mind reading.

But it wouldn’t be my writing.

With all the caveats, there is real economic power in the vibe-coded RAG system “I built” in just a few minutes.

When Thought Becomes Software

I started this post with an idea. Could I replace myself with a stream of generative AI code to write Substacks for me while I enjoy piña coladas all summer long, collecting likes and subscribers without doing anything?

However, what startled me was how I managed to achieve all this without fully understanding what I was doing. The outcome turned out to be much more complex than I realized.

Of course, I could invest time in grasping this complexity, but adding features and solving real problems is far more exciting than fully understanding my actions.

The ability to introduce complexity that I didn’t understand with a few missives is awe-inspiring. But what are the consequences?

The Danger of Uncomprehended Complexity

Software is everywhere. It powers our cars, sets our toasters, orchestrates activities on the cloud, and tracks our movements through our watches. Everywhere. What are the consequences of rapidly proliferating software in the world? Software that we don't understand.

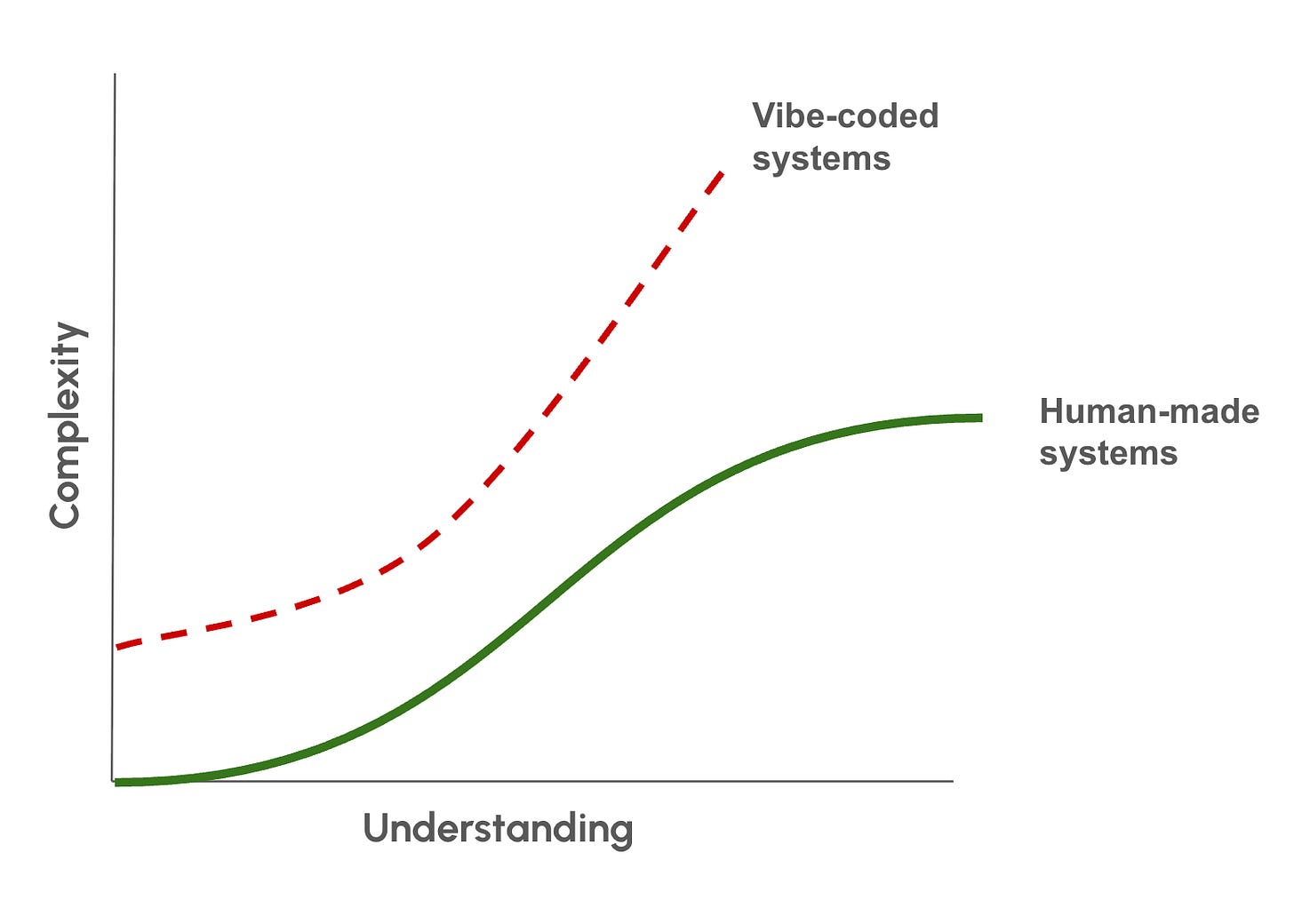

Generally, before the era of vibe coding, people created software. In many cases, it was written by engineers who (mostly) grasped, at a high level, what was happening when their code ran.2

However, vibe coding will dramatically change the ratio between understanding and complexity.

Historically, our limits on understanding acted as natural checks on the complexity we could create in human-designed systems. (Yes, interactive or emergent systems have superadditive complexity.)

Vibe coding will allow us to produce highly complex systems, but at a radically lower level of understanding.

In other words, software’s complexity is no longer constrained by our comprehension in the same way. Complexity is now super cheap.

Marching Toward Abstraction and Opacity

This isn't entirely new. Technological progress is often a march toward increasing levels of abstraction, allowing us to create complexity without fully understanding everything. Abstraction allows us to build ever more complex systems without fully needing to know or understand lower-level mechanisms: C++ compiles to assembly, which maps to logic gates, which operate through the movement of electrons…..

Without this march, we would not have the bounties of the modern world.

Vibe coding seems to be the next logical step. We are moving away from the need for humans (e.g., programmers) to be intermediaries in translating ideas into computer code. Vibe coding allows us to abstract away more than just low-level implementation; it also allows us to create abstraction layers for intent and architecture.

What happens when the systems we rely on become opaque even to their builders?

The Economic Effects of Coding Magically

Many products have become unreasonably complex over the years. They are impossible for consumers to repair. In many cases, they are too expensive and impossible even for experts to fix.

Indeed, the modern economy, in many ways, is based on the notion that such technologies cannot be repaired. Instead, when things don’t work, we replace them.

In most cases, replacing products is cheaper and easier than fixing them, not simply because they are unrepairable, but rather because the incentives of the economic system have made replacement more efficient than repair.3

Three Possible Effects of Magical Software

I wanted a framework to consider what all of this meant, and while I don't yet have a fully formed set of ideas, three things came to mind as I thought through the market forces that could shift due to this innovation.

Three things that come to mind:

Lower Barriers to Entry. The first point is that we can build quite complex things very cheaply. This will lead to a significant reduction in entry costs. You no longer need to learn coding to build systems and connect complex AI pipelines. You saw that I was able to do it with 243 words, and a “vibe” about what I wanted. Obviously, I had a baseline level of knowledge that this could be done and how to run it once completed, but this isn’t rocket science. When tasks become easier, barriers to entry are lowered, leading to increased competition.

Lower copying costs. Second, vibe coding is about first understanding the functionality you want and then being able to reverse engineer something that produces that functionality.

If I were to consider this in the context of competition, assuming there was no IP protection—and for many software products, IP protection is quite flexible anyway—I could simply look at a website, take a few screenshots, and then figure out how to reverse engineer the functionality of the software using vibe coding. In fact, I could create a bot that scours the web for new companies, learn their functionality, and then codes a product with similar functionality. I’ve also tried uploading patents and asking ChatGPT to tell me how to create a technology that has the same functionality, without violating the patent.

This means that copying costs decline dramatically for any company developing technology.

Increased variance of outcomes. Finally, the third component concerns complexity. I produce code that I do not fully understand, and I could make it even more complex without comprehending it at all. As the number of components in a system increases, the potential complexity grows exponentially due to the possible interactions among those components. Given that we don’t fully grasp each of the components or how they interact, there’s considerable uncertainty regarding the system's behavior. Outcome variance should increase dramatically.

What Might Happen to Software Markets?

We are likely to see many more players entering the software market, and exclusivity will diminish as copying becomes easier and cheaper, particularly with global coding costs decreasing. Technical skills alone won’t provide a competitive edge. At the same time, outcomes will become more unpredictable, with significant breakthroughs on one end and catastrophic failures on the other, which will probably lead us toward certification systems akin to an FDA for software to restore some trust. Additionally, since creating new software will be cheaper than repairing old systems, the market will shift toward constant replacement. Repair simply won’t be worth it anymore, except in specific edge cases.

The Fragility of Rapid Complexity

The vibe coding experience was fun. I think I’m going to build more software with it.

However, my biggest takeaway is that I can create incredibly complex working artifacts without fully understanding them.

This is both amazing and scary.

As vibe coding and AI-generated startups proliferate, we must ask ourselves what the overall consequences of this transformative technology will be.

The fact that these systems appear to function on the surface but are epistemically opaque raises a host of issues.

What are the unintended risks of scaling systems whose complexity we can't comprehend, either individually or collectively?

And do we really want systems that produce “throwaway code” or systems that can’t be fixed when they break?

My oven broke over a year ago, and I haven't had it replaced. I have better things to do with my money. I asked someone to come and repair it, and they said, 'No, you’ve got to throw it away.' I’m worried we will end up with a lot of broken ovens.

I guess that's what magic is—outcomes without understanding. Magical worlds are fine, but somewhere inside of me, it makes me feel like the last several hundred years have been geared toward removing magical thinking in favor of reason and understanding.

Are we ready to go back?

Version 1.0 - Straight out of the vibe

### Substack Post for Cluster 0

**Opening Paragraph:**

Imagine yourself in a bustling city, surrounded by intricate networks of infrastructure, each system functioning autonomously yet interdependently. This sprawling urban landscape represents the complexity of our current sociotechnical systems, where artificial intelligence (AI) has become an integral part of our daily lives, just like the electric grid or public transportation. Yet, as we increasingly rely on these AI systems, we're confronted with a paradox: the very technology that promises to improve our lives also brings with it new challenges and risks. This article explores the rise of Generative Artificial Intelligence (GenAI), a powerful new technology that is revolutionizing industries but also raising concerns about accountability, security, and education.

**TL;DR:**

Generative AI, while a promising tool for innovation, presents unique challenges due to its complexity, transparency issues, and potential security risks. Successful GenAI implementation requires a balance between innovation and governance, necessitating new frameworks for enterprise architecture management (EAM) and a focus on equipping learners with skills to engage with GenAI effectively. Meanwhile, the evolution of AI security practices and the development of AI agents with integrated cross-domain abilities are critical to harness the full potential of GenAI and mitigate its risks.

**Main Body:**

Generative AI is a rapidly evolving technology that is reshaping industries, from software development to education. Its power lies in its ability to generate high-quality outputs, such as images or text, from simple inputs. For example, visual GenAI models can create stunning images from textual prompts, offering limitless possibilities for creativity and innovation. However, the complexity of these AI systems and their opaque decision-making processes raise concerns about accountability and transparency. In a world increasingly reliant on AI, understanding the underlying mechanisms of these systems is crucial to manage potential risks and ensure responsible AI use.

On the other hand, the integration of GenAI into enterprise settings poses unique challenges. Organizations face significant obstacles in scaling GenAI, including technological complexity, governance gaps, and resource misalignments. Traditional enterprise architecture management (EAM) frameworks often fall short in addressing these challenges due to their inability to accommodate the unique requirements of GenAI. For example, balancing innovation with compliance and managing low data governance maturity are specific issues that must be tackled when adopting GenAI.

The education sector is another area where GenAI is making significant inroads. Tools like ChatGPT are revolutionizing teaching and learning, demanding a new set of skills from students and educators alike. AI literacy, critical thinking, and ethical AI practices are just some of the competencies needed to engage effectively with GenAI. But the integration of GenAI into education isn’t just about equipping learners with the right skills; it also involves reshaping curriculum design and pedagogical strategies to foster inclusive and responsible GenAI adoption.

**Model or Mental Framework:**

The complexity of GenAI can be likened to a bustling cityscape, with its intricate web of systems and networks. Just like urban planners need to understand the city's infrastructure to manage it effectively, we need a deep understanding of GenAI to harness its potential responsibly. This involves viewing GenAI not just as a tool but as an "artificially created nature," a metaphor that underscores the need for a precautionary approach to GenAI risks.

A useful model for addressing these risks is the Advanced Threat Framework for Autonomous AI Agents (ATFAA), which organizes agent-specific risks. This model helps us understand how GenAI agents' autonomy, persistent memory access, complex reasoning, and tool integration create novel security risks. Coupled with the SHIELD framework, which proposes practical mitigation strategies, we can begin to address GenAI’s unique security challenges.

In the context of education, the Aspire to Potentials for Learners (A2PL) model offers a way to cultivate Self-Directed Growth, a competency that enables learners to drive their own developmental pathways within GenAI-supported environments. This model highlights the importance of learner aspirations, complex thinking, and summative self-assessment in fostering a sustainable learning ecosystem.

**Takeaways:**

Embracing GenAI calls for a delicate balance between innovation and governance. Organizations must tailor their EAM frameworks to GenAI-specific challenges, and educational institutions should equip learners with the necessary skills to engage effectively with GenAI. As GenAI agents become more common, we must adapt our security practices to address their unique risks and continue to explore the integration of cross-domain abilities to advance toward Artificial General Intelligence (AGI).

**Closing:**

In conclusion, as we navigate the bustling cityscape of GenAI, we're faced with the challenge of ensuring that this powerful new technology serves us well without compromising our security or integrity. As we continue to explore the potential of GenAI, what new frameworks and strategies will emerge to guide us? How will we ensure that everyone, from enterprise leaders to learners, is equipped to engage with GenAI effectively? These are questions that will shape our journey through the evolving landscape of Generative Artificial Intelligence.

---

### Related Articles

- Accountability of Generative AI: Exploring a Precautionary Approach for

"Artificially Created Nature" (http://arxiv.org/abs/2505.07178v1)

- Enterprise Architecture as a Dynamic Capability for Scalable and

Sustainable Generative AI adoption: Bridging Innovation and Governance in

Large Organisations (http://arxiv.org/abs/2505.06326v1)

- Safer Prompts: Reducing IP Risk in Visual Generative AI (http://arxiv.org/abs/2505.03338v1)

- NGENT: Next-Generation AI Agents Must Integrate Multi-Domain Abilities

to Achieve Artificial General Intelligence (http://arxiv.org/abs/2504.21433v1)

- Fostering Self-Directed Growth with Generative AI: Toward a New Learning

Analytics Framework (http://arxiv.org/abs/2504.20851v1)

- Securing Agentic AI: A Comprehensive Threat Model and Mitigation

Framework for Generative AI Agents (http://arxiv.org/abs/2504.19956v2)

- Generative AI in Education: Student Skills and Lecturer Roles (http://arxiv.org/abs/2504.19673v1)

- The Role of Generative AI in Strengthening Secure Software Coding

Practices: A Systematic Perspective (http://arxiv.org/abs/2504.19461v1)

Version 1.1: With Personalizaiton

### Substack Post for Cluster 1

**Opening paragraph**

As I stroll through San Francisco, a city I call home, I can't help but notice the intricate urban layout that encapsulates its history, culture, and aspirations. The serpentine roads, the Victorian houses, and the steel-and-glass skyscrapers all speak to the city's past and future. Each architectural detail, each junction, and each open space is a testament to meticulous urban planning. But with the dawn of the AI era, we are witnessing a paradigm shift in how cities are designed and planned. Could generative AI redefine urban planning, and what are the implications for society at large?

**TL;DR**

Generative AI models are opening new avenues in urban planning, synthetic data generation, and complex data structuring. These models offer the capacity to automate traditionally manual processes, enhance the quality of generated data, and facilitate a more efficient transfer of unstructured to structured data. However, this cutting-edge technology also brings forth challenges, including data quality assessment, potential misuse, and intellectual property infringement. This post explores these ideas in depth, providing a comprehensive overview of how generative AI is transforming multiple sectors while highlighting the need for robust frameworks to ensure ethical and responsible usage.

**Main body**

Urban planning, traditionally a manual and labor-intensive process, is witnessing a technological revolution with the advent of generative AI. For instance, a recent study used a state-of-the-art Stable Diffusion model augmented with ControlNet to generate high-fidelity satellite imagery based on land use descriptions and natural environments. By linking satellite imagery with structured land use information from OpenStreetMap, the researchers were able to generate realistic and diverse urban landscapes. The model proved effective across diverse urban contexts, opening the door to a future where AI could play a pivotal role in urban planning, enhancing planning workflows and public engagement.

Similarly, generative AI models have made significant strides in the realm of smart grids. These models can generate large amounts of synthetic data that would otherwise be difficult to obtain due to confidentiality constraints. The challenge, however, lies in assessing the quality of data produced by these generative models. To address this, a novel metric based on Fréchet Distance (FD) can evaluate the quality of the synthetic datasets from a distributional perspective. This approach enhances the reliability of data-driven decision-making in smart grid operations.

Generative AI models are also transforming the way text data is structured. A hybrid text-mining framework converts raw, unstructured scientific text into structured data, enhancing entity recognition performance by up to 58% and improving the quality of the final structured data by up to 83%. This advancement could significantly expand the scope of data-driven scientific discovery.

Despite these promising developments, the rise of generative AI has also raised concerns, especially in the realm of visual content generation. Open-source text-to-image (TTI) pipelines, while providing creative opportunities, also facilitate harmful practices such as the generation of non-consensual deepfakes and the amplification of misogynistic or hypersexualized content. Similarly, AI-generated content risks infringing on intellectual property rights due to the potential for models to memorize and reproduce specific content.

**Model or mental framework**

To understand the impact of generative AI, we can conceptualize it as a powerful tool in a craftsman's workshop. Just as a hammer can be used to build a house or break a window, generative AI can be used for beneficial or harmful purposes. The key lies in the craftsman's intent and the controls in place to ensure responsible use.

For generative AI to deliver its full potential, we need robust frameworks to govern its use. These frameworks must balance the need for creativity and efficiency with considerations of ethics, privacy, and intellectual property rights. They should include mechanisms to assess the quality of generated data, safeguards against misuse, and measures to ensure intellectual property rights are respected.

**Takeaways**

Generative AI is revolutionizing urban planning, data structuring, and synthetic data generation. It offers the potential to automate traditionally manual tasks, enhance the quality of generated data, and facilitate efficient data structuring. However, it also raises considerable challenges, including data quality assessment, potential misuse, and intellectual property infringement.

To navigate these challenges, we need robust, comprehensive frameworks to govern the use of generative AI. These frameworks should balance the need for creativity and efficiency with considerations of ethics, privacy, and intellectual property rights. They should include mechanisms to assess the quality of generated data, safeguards against misuse, and measures to ensure intellectual property rights are respected.

**Closing**

As generative AI continues to evolve and its applications continue to expand, how will we ensure that this powerful tool is used for the benefit of all? The answer lies not only in the development of advanced models but also in the creation of robust ethical, legal, and regulatory frameworks that guide their use. As we stand on the cusp of this new era, we must ask ourselves: How can we shape the future of generative AI to ensure it serves as a tool for progress rather than a source of harm?

---

### Related Articles

- Generative AI for Urban Planning: Synthesizing Satellite Imagery via

Diffusion Models (http://arxiv.org/abs/2505.08833v1)

- Fréchet Power-Scenario Distance: A Metric for Evaluating Generative

AI Models across Multiple Time-Scales in Smart Grids (http://arxiv.org/abs/2505.08082v1)

- Symbol-based entity marker highlighting for enhanced text mining in

materials science with generative AI (http://arxiv.org/abs/2505.05864v1)

- Perpetuating Misogyny with Generative AI: How Model Personalization

Normalizes Gendered Harm (http://arxiv.org/abs/2505.04600v1)

- Safer Prompts: Reducing IP Risk in Visual Generative AI (http://arxiv.org/abs/2505.03338v1)

- Ensuring Reproducibility in Generative AI Systems for General Use Cases:

A Framework for Regression Testing and Open Datasets (http://arxiv.org/abs/2505.02854v1)

- Leveraging Generative AI Through Prompt Engineering and Rigorous

Validation to Create Comprehensive Synthetic Datasets for AI Training in

Healthcare (http://arxiv.org/abs/2504.20921v1)

Version 1.2 With an Upgraded Model

### Substack Post for Cluster 0

**Opening paragraph**

Last spring, I found myself in a small conference room at a community center in rural Virginia, listening to a group of farmers debate whether ultraviolet light could cure COVID-19. I’d been invited to speak about information flows in crisis moments, but the talk evolved into an impromptu fact-checking workshop: we sifted through Facebook posts, dissected forwarded audio clips, and traced dubious medical claims back to satirical news sites. One farmer confided he’d always trusted what a neighbor shared on WhatsApp. When I explained how generative AI can spin entirely new, but patently false, stories at the click of a button, his eyes widened. We’d crossed a threshold: misinformation had ceased to be an accident of rumor; it was now a technologically amplified phenomenon, blurring the line between honest mistake and deliberate manipulation.

That afternoon, as I packed my notes, I realized this challenge sat at the intersection of two great forces I study—technological innovation and organizational response. On one hand, generative artificial intelligence (genAI) offers the promise of accelerating discovery, unleashing creativity, and redesigning how we work. On the other, it supercharges the spread of “fake news,” a problem as old as Gutenberg’s press but newly weaponized at global scale. How, I wondered, do we harness the power of genAI without becoming unwitting distributors of falsehood?

Back at my desk, I dove into two strands of research. The first traced the legislative reaction to misinformation: who writes these laws, why they emerge, and whether they help or harm. The second examined genAI not just as a clever trick but as a genuine economic force—a general-purpose technology (GPT) that powers knock-on innovations, and even as an invention of methods of invention (IMI) that turbocharges R&D. What struck me was how little integration existed between these two literatures: we talk about regulating speech or debating productivity gains, but rarely about the dynamic feedback loop that ties them together. This essay is my attempt to stitch those threads into a coherent picture.

**TL;DR**

Generative AI sits at a crossroads: it is poised to become a transformative general-purpose technology that accelerates innovation, yet it also magnifies the age-old problem of misinformation.

Recent legislative efforts against “fake news” have gone from illiberal regimes to Western democracies, focusing on national security and public health but differing in zeal and scope according to political freedom.

To navigate this new terrain, we need a simple mental framework that captures the interplay between genAI’s innovation boons (GPT + IMI) and the evolving architectures of regulation—public laws, platform policies, and decentralized norms.

**Main body**

1. The ubiquity of misinformation predates the internet: medieval pamphleteers, yellow journalists, radio bonanzas of propaganda. What’s changed is scale and speed. Today, a doctored video of a political candidate can circulate globally within minutes. We’ve watched disinformation shape elections, sow mistrust in vaccines, and even trigger violence. The conventional playbook—from editorial gatekeepers to fact-checking NGOs—struggles under the weight of billions of real-time posts. Meanwhile, legislative bodies scramble to catch up, drafting laws that define “mal-information” and prescribe penalties for genuine or unintentional offenders. My own fieldwork in Southeast Asia found that the first wave of these laws emerged in countries with weaker civil liberties and lower GDP per capita—places where governments faced fewer constraints on speech and greater incentives to control narratives.

2. Then came the pandemic. In 2020 alone, over a hundred new statutes or regulations targeted misinformation about public health. Suddenly, the stakes were life and death: unverified herbal cures, conspiracy theories about 5G towers spreading the virus, or outright denialism that slowed vaccine uptake. Governments invoked emergency powers; platforms accelerated their content moderation algorithms; civil society groups published daily myth-busting bulletins. But the rush to legislate revealed deep tensions. Hard-line measures risked chilling legitimate speech, while softer “notice and takedown” rules often lagged behind rapidly mutating falsehoods. The result was a patchwork of responses—some draconian, others toothless—hardly the unified front needed to stem a truly global infodemic.

3. Meanwhile, genAI quietly matured. Early chatbots and vision models made headlines, but few realized these systems would soon sit at the heart of both productivity engines and information manipulation tools. Economists distinguish two crucial categories of transformative technology. General-purpose technologies (GPTs) like electricity or the steam engine spark waves of complementary innovations—new services, reorganized industries, and sustained growth. Invention of methods of invention (IMIs) such as the high-throughput microscope or combinatorial chemistry speeds up R&D itself. Remarkably, genAI ticks both boxes: it can write code, design experiments, and even generate synthetic data to train successor models.

4. What does this dual identity imply? On the upside, genAI can help us detect disinformation by automating content verification, flagging deepfakes, and surfacing the provenance of images or texts. Imagine a newsroom where AI agents scan every incoming claim, cross-reference it with primary sources, and highlight inconsistencies. Research labs could deploy genAI to sift through mountains of academic papers, identify replication failures, and propose novel experimental hypotheses. Productivity growth might get a new lease on life after a decade of plateauing by reducing the “friction” in human-machine collaboration.

5. Yet the very generativity that drives these gains also fuels the spread of falsehood. An open-source genAI model can write convincing political satire that blurs into disinformation. It can churn out thousands of phishing emails, scaled misinformation campaigns, or bot-driven comment floods. Manual fact-checking can’t keep pace; automated filters often struggle to distinguish genuine from AI-generated false claims, especially when malicious actors fine-tune models for stealth. In effect, genAI intensifies the arms race between detection and deception, manufacture and verification.

6. The policy response has entered a new phase. What began in authoritarian contexts has now swept into Western democracies. Legislators debate obligations for transparency—requiring companies to watermark AI-generated content or disclose model training data. Platform liability is back on the table, with proposals to hold social media companies accountable for algorithmic amplification of false material. At the international level, conversations range from UNESCO’s “Recommendation on the Ethics of AI” to OECD guidelines on digital content governance. The question is no longer whether to regulate “lawful but awful” speech, but how to design systems that balance free expression with societal resilience.

7. These debates mirror earlier technology cycles. The industrial revolution prompted factory safety laws as steam engines powered textile looms; radio deregulation in the 1980s unfolded alongside new content standards. What’s different now is velocity: regulation, innovation, and counter-innovation unfold in tandem, often outpacing legislators’ ability to fully comprehend emerging risks or opportunities. A parliamentary committee might take years to pass an AI transparency bill, by which time a new class of private foundation models has slipped through loopholes.

8. To bring clarity to this chaos, we must zoom out and look at the information economy as an evolving ecosystem. GenAI is a catalyst, reshaping the terrain with twin blades of productivity enhancement and misinformation amplification. Our goal should be to erect adaptive institution sandboxes—dynamic regulations, modular policy toolkits, and real-time monitoring dashboards that evolve with the technology. Such hybrid approaches combine top-down mandates (e.g., watermarking requirements) with bottom-up community standards (open-source developer norms) and market signals (liability insurance for platforms).

**Model or mental framework**

Imagine a two-dimensional map where the horizontal axis captures **Technological Generativity** (from low to high) and the vertical axis measures **Regulatory Flexibility** (from rigid to adaptive). On the left, low generativity technologies—say, basic word-processing software—pose minimal misinformation risk, so rigid regulation incurs high compliance costs for little gain. On the right, high generativity technologies—cutting-edge genAI engines—can spawn both innovation and disinformation at scale, demanding more nuanced governance.

In the top-right quadrant, **Adaptive Regulation + High Generativity**, we see fertile ground for experimentation: flexible rulebooks that update in real time, collaborative governance boards of regulators, industry, and civil society, and continuous evaluation loops. This is where genAI’s productivity benefits outweigh oversight costs, and where regulation can shape innovation trajectories—steering models towards ethical design and transparent deployment.

Conversely, the bottom-right quadrant, **Rigid Regulation + High Generativity**, risks either stifling innovation (if rules are too burdensome) or failing to curb misuse (if they’re quickly evaded). Past examples include broad “data localization” laws that drive AI talent offshore or sweeping bans on encrypted messaging that undermine privacy without halting bad actors. Our task, then, is to navigate toward the top-right: calibrating regulatory interventions to match genAI’s generative power, employing sunset clauses, impact assessments, and co-regulatory frameworks.

**Takeaways**

• Generative AI is both a general-purpose technology and an invention of methods of invention. That dual nature makes it a powerful engine for productivity growth—but also a supercharger for misinformation. Understanding this duality is key to designing effective responses.

• Global legislative efforts against “fake news” have spilled from illiberal states into mature democracies, driven by threats to national security, public health, and electoral integrity. Yet the shape and effectiveness of these laws hinge on each country’s legal traditions, technological capacity, and political norms.

• We need dynamic, layered governance architectures that blend top-down laws with bottom-up norms and market incentives. A simple 2×2 framework—mapping technological generativity against regulatory flexibility—can guide us toward adaptive regimes that foster innovation while safeguarding informational integrity.

**Closing**

As I left that rural hall in Virginia, I reflected on one farmer’s parting question: “Can we trust the next headline we read online?” There’s no simple yes or no. Trust hinges on how we shape the engines beneath the surface—both the algorithms that craft narratives and the laws that police them.

In my next essay, I’ll unpack one of those layers—how open-source communities can co-govern public AI models, setting technical standards that preempt misuse without stifling creativity. Because if there’s one thing I’ve learned in ten years of field experiments, it’s that governance works best when it is as generative and adaptive as the technologies it seeks to channel.

For now, I leave you with this: in a world where machines can both invent cures and concoct conspiracies, what institutional innovations will we need to ensure that truth retains the upper hand? Over to you.

---

### Related Articles

- Lawful but Awful: Evolving Legislative Responses to Address Online

Misinformation, Disinformation, and Mal-Information in the Age of Generative

AI (http://arxiv.org/abs/2505.15067v1)

- Generative AI at the Crossroads: Light Bulb, Dynamo, or Microscope? (http://arxiv.org/abs/2505.14588v1)

Final Code

pip install requests pandas scikit-learn openai beautifulsoup4

import requests

import pandas as pd

from bs4 import BeautifulSoup

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.cluster import KMeans

import openai

# ========= PERSONALIZATION =========

def personalize_voice(base_prompt: str, personality_descriptor: str = None) -> str:

"""Injects a personal writing voice into the base Substack prompt."""

default_descriptor = (

"You are Sharique Hasan, a professor of strategy and sociology who blends deep academic rigor "

"with accessible storytelling. Your writing style is reflective, often drawing from personal experience, "

"field experiments, and empirical work to illustrate insights. You connect theory to practice with clarity, "

"emphasizing real-world implications, especially in entrepreneurship, innovation, and social networks."

)

descriptor = personality_descriptor if personality_descriptor else default_descriptor

personalized_prompt = base_prompt.replace(

"You are a Substack writer specializing in",

f"{descriptor}\n\nYou are writing a Substack article specializing in"

)

return personalized_prompt

# ========= PROMPT GENERATION =========

def format_substack_prompt(cluster_id, summaries, topic="innovation and organization"):

return (

f"You are a Substack writer specializing in {topic}.\n\n"

f"Your goal is to write a thoughtful and engaging article based on the following cluster of ideas: {cluster_id}.\n"

f"Use the provided article summaries below as your source material. Boil the ideas down to an essential argument that is both intuitive and powerful.\n\n"

f"Structure the post in this format:\n"

f"- **Opening paragraph**: Personal, reflective, or story-driven context.\n"

f"- **TL;DR**: A short summary of the core idea or insight.\n"

f"- **Main body**: Develop the argument through clear examples, often comparing states (e.g., past vs. future, manual vs. automated).\n"

f"- **Model or mental framework**: Provide a simple analytical tool, metaphor, or economic model that explains the core transition or mechanism.\n"

f"- **Takeaways**: What should readers remember or apply?\n"

f"- **Closing**: End with a question or insight that sets up a future post or reflection.\n\n"

f"Your tone should be clear, reflective, and idea-driven—similar to a well-written essay on Substack that combines storytelling, conceptual depth, and actionable insight.\n\n"

f"---\n\n"

f"{summaries}\n\n"

f"---\n\n"

f"Now, write the article in full, following the format above. Ensure that each section has at least 3 paragraphs. The total post should be 3000 words."

)

# ========= ARXIV FETCH =========

def fetch_arxiv_articles(query="generative ai", max_results=100):

url = f"http://export.arxiv.org/api/query?search_query=ti:\"{query}\"&sortBy=submittedDate&sortOrder=descending&max_results={max_results}"

response = requests.get(url)

soup = BeautifulSoup(response.content, 'xml')

entries = soup.find_all('entry')

articles = []

for entry in entries:

articles.append({

'title': entry.title.text.strip(),

'summary': entry.summary.text.strip(),

'link': entry.id.text.strip()

})

return pd.DataFrame(articles)

# ========= CSV OUTPUT =========

def save_to_csv(df, filename='arxiv_articles.csv'):

df.to_csv(filename, index=False)

# ========= CLUSTERING =========

def cluster_articles(df, n_clusters=5):

if len(df) < n_clusters:

print(f"Warning: Only {len(df)} articles. Skipping clustering.")

df['cluster'] = 0

return df

vectorizer = TfidfVectorizer(stop_words='english', max_features=1000)

X = vectorizer.fit_transform(df['summary'])

model = KMeans(n_clusters=n_clusters, random_state=42)

df['cluster'] = model.fit_predict(X)

return df

# # ========= GENERATE BLOG POST =========

# def generate_post_ideas(summaries, cluster_id, openai_client):

# base_prompt = format_substack_prompt(cluster_id, summaries)

# prompt = personalize_voice(base_prompt)

# response = openai_client.chat.completions.create(

# model="gpt-4",

# messages=[{"role": "user", "content": prompt}],

# temperature=0.7

# )

# return response.choices[0].message.content

# ========= GENERATE BLOG POST =========

def generate_post_ideas(summaries, cluster_id, openai_client):

base_prompt = format_substack_prompt(cluster_id, summaries)

prompt = personalize_voice(base_prompt)

response = openai_client.chat.completions.create(

model="o4-mini-2025-04-16", # Updated to latest best model

messages=[{"role": "user", "content": prompt}],

temperature=1

)

return response.choices[0].message.content

# ========= FILE OUTPUT =========

def save_cluster_ideas(df, openai_client, base_filename="substack_ideas_cluster"):

grouped = df.groupby("cluster")

for cluster_id, group in grouped:

summaries = "\n".join(group['summary'].tolist())

references = "\n".join(f"- {row['title']} ({row['link']})" for _, row in group.iterrows())

ideas = generate_post_ideas(summaries, cluster_id, openai_client)

output_text = (

f"### Substack Post for Cluster {cluster_id}\n\n"

f"{ideas}\n\n"

f"---\n\n"

f"### Related Articles\n{references}"

)

with open(f"{base_filename}_{cluster_id}.txt", "w", encoding="utf-8") as f:

f.write(output_text)

# ========= MAIN =========

if __name__ == "__main__":

OPENAI_API_KEY = "API KEY GOES HERE"

client = openai.OpenAI(api_key=OPENAI_API_KEY)

print("📡 Fetching arXiv articles...")

articles_df = fetch_arxiv_articles("generative ai", max_results=30)

print("💾 Saving articles to CSV...")

save_to_csv(articles_df, "arxiv_articles.csv")

print("🧠 Clustering articles...")

clustered_df = cluster_articles(articles_df)

print("📝 Generating Substack posts...")

save_cluster_ideas(clustered_df, client)

print("✅ All tasks completed.")

Frankly, who knows whether the research was AI generated or not.

Yes, individuals would use code snippets here and there and modular components that they would call through APIs, which made technologies much more complex (without understanding), perhaps more complex than any individual could comprehend.

This dynamic may change with the introduction of tariffs, but it could also lead to the development of less complex technologies.